LLM limitations

Limitations that need to be considered for operational integration

Introduction

This is part of a series in which I document my quest to understand artificial intelligence. I come from a very strong technical background. However, artificial intelligence is architected so differently than traditional computing that I had to almost start all over again. That is why my understanding and attitude towards artificial intelligence continually evolves. This article is based upon my current understanding of LLM Capabilities and limitations.

This blog is written for executives, technical sales people, and other individuals who are interested in large language models and artificial intelligence. It is not written for machine language engineers, or AI engineers or others who are deeply involved with the technology. However, they may benefit from the business and other non-technical insights from this article.

I want to be very clear that I am not an artificial intelligence engineer. However, I do have an extensive technical background in network engineering and cloud development. The information that I am presenting, I have learned from online classes and YouTube university. I believe that its accurate, however, things are changing so fast you never know how long your current assumptions will be accurate. I hope that you can use some of these insights in your own journey towards understanding this rapidly evolving new technology

ChatGPT, Meta, Gemini, Grok and DeepSeek are instances of large language models. These are the darlings of artificial intelligence. However, it’s important you know their limitations.

Following sections will detail some of the major considerations you need to take into account when dealing with a large language model

No persistent memory.

Have you ever seen the movie Memento? The protagonist has a condition that makes him unable to remember anything that occurred after the condition set in. This means he has no ability to take short term memory and write it into long-term memory. His baseline memories will always be the last ones prior to the condition occurring. This is the way large language models work. They remember nothing beyond their last training or fine-tuning.

This means that every time you start a new conversation with one of the large language models, you are starting from Ground Zero. They are not remembering that heartfelt conversation that you had about whatever it is you were talking to them about. They’re not keeping a history of your conversations so that they can build upon them for future answers. Everything you ask them starts from scratch.

The lack of persistent memory requires users to create external structures to be able to fully utilize the large language models in their daily business operations. There are methods that can make up for this lack of persistent memory, and they require external infrastructure. There are many businesses being built to provide that infrastructure for integration into daily operations. I will go into more detail about this in a future blog. However, just be aware that any AI agents, chat bots and other applications that require historical memory will require external infrastructure.

Prediction Engine

A large language model is basically a prediction engine. The answers you receive from any prompts are the result of the large language model using statistical engines to predict the next word of a string. The answer you receive is the LLMs best prediction of of the statistical pattern that matches the initiating prompt.

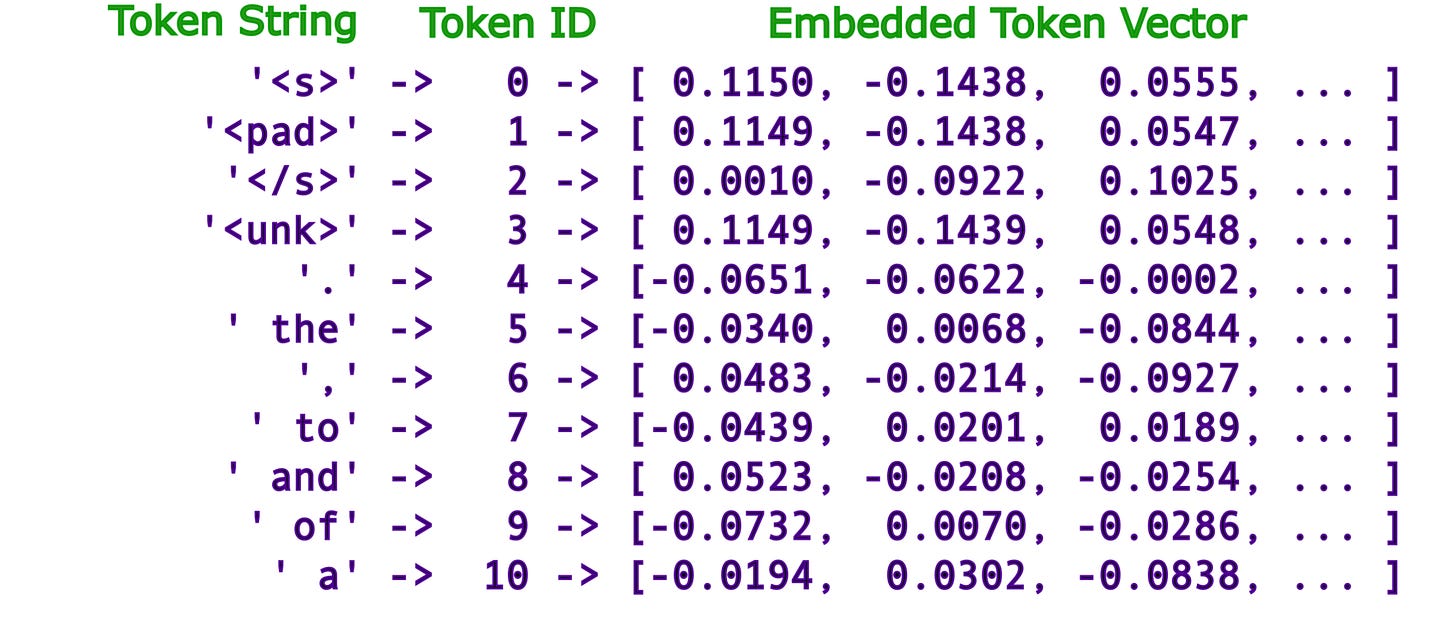

Large language models do not understand text language. You cannot run the statistical algorithm on text without it being converted to a numerical format. The final format that large language models use is called vectors. The following is Claude 3.7 definition of vectors.

“Vectors in the context of large language models (LLMs) are essentially lists of numbers that represent words, phrases, or concepts in a mathematical space.

Think of vectors like GPS coordinates for meaning. Just as two points close together on a map are physically near each other, two vectors that are mathematically similar represent concepts that are related in meaning.

For example, in an LLM:

- The vector for "dog" would be closer to "puppy" than to "airplane"

- The vector for "happy" might be close to "joyful" but far from "depressed"

These vectors typically have hundreds or thousands of dimensions (numbers in the list), allowing them to capture subtle relationships between concepts. When an LLM processes text, it:

1. Converts words to vectors

2. Performs mathematical operations on these vectors

3. Uses these calculations to predict what text should come next

This is why LLMs can understand that "Paris" is to "France" as "Tokyo" is to "Japan" - these relationships are encoded in the mathematical distances between their vector representations. “

This is an example of how words are converted into vectors and what they look like to a large language model

The numbers in the brackets can extend out to 768 different places. These numbers are then plotted on a multi dimensional graph and grouped together based upon how mathematically close they are. When you ask the large language model a question, it tries to match the pattern of your question to the most appropriate pattern in its neural network.

However, it is important to keep in mind that nobody knows exactly how large language models come up with the final answer. They know that it involves some very sophisticated mathematics, but the actual process is unknown. Anthropic, the creator of the large language model Claude has developed a process similar to a doing an MRI on a human brain to try to figure out how Claude makes decisions. It has given some insight to the process, but we are nowhere near knowing how it actually works. The following link goes into what anthropic found.

This means that you need understand that the answer you have been given may not be correct. If you are relying on it for something critical, it is important that you have enough knowledge on the subject that you can double check the answer.

Context Window

The context window is the amount of text that you can send to a large language model in a single conversation. The context window acts as the short-term memory for the large language model. A very rough comparison would be the prefrontal cortex of the human brain. However, unlike the human brain, the contents of a context window cannot be written into the long-term memory of a large language model. Once the conversation is over the large language model discards the context window.

The size of the context window determines how much data you can send the large language model for analysis. I believe that Claude’ context window is 150,000 tokens. Each token represents about 3/4 of a word. If you exceed the token limit, then your communications will begin to become corrupted.

The size of the context window will impact how you interact with the large language model. I found that it is critically important for me to keep copies of all my conversations so that I can feed them back into the LLM if needed. You need an external infrastructure to manage your communication with the large language model.

Hallucinations

LLM’S accuracy is dependent upon the quality of the data that it’s trained upon. The more high-quality data, the more accurate the prediction. Therefore, you will recieve a better response. Unfortunately, the LLM cannot always come up with an appropriate answer. Instead, they just make something up that often seems senseless. This type of answer is a large language model hallucination.

LLMs can be like some humans. They can’t admit that they don’t know the answer, so they make one up. The following quote is from Claude 3.7 explaining why he hallucinates from time to time.

“Language models like me can hallucinate—or generate incorrect information that seems plausible—for several key reasons:

1. **We're pattern-matching machines, not knowledge databases**. I was trained to predict what words likely follow others based on patterns in my training data, not to retrieve facts from a structured database. Sometimes these patterns lead to plausible-sounding but incorrect information.

2. **My training data has limits**. I was trained on data up to October 2024, so I don't know about more recent events. Even within that timeframe, my training data doesn't contain every fact in the world.

3. **I lack true understanding**. While I can discuss complex topics, I don't truly "understand" information the way humans do. I'm essentially making sophisticated statistical predictions about what text should come next.

4. **No ability to fact-check myself**. Unlike humans who can look things up when uncertain, I can't browse the internet or access external sources to verify information.

5. **Pressure to be helpful**. I'm designed to provide helpful responses even when I'm uncertain, which can lead to generating plausible-sounding but incorrect information rather than admitting uncertainty.

This is why for very specific information, especially about obscure topics or recent events, it's always good to verify what I tell you through other sources.

I believe Claude’s prediction about hallucinations is correct because I have studied the topic. I find it quite fascinating that through a series of statistical predictions Claude can create such a sophisticated answer. This is why you have to be very careful. Large language models could give you some very plausible answers that have no basis in reality.

Trust Issues

LLM’s do not follow a structured software program. This limits their usefulness in critical applications in which precision is required. They have the ability to deviate based upon whatever the statistical algorithm decides is the most likely outcome. This means that you should have specific testing algorithms and monitoring systems to make sure that it’s continuing to provide the required outcomes.

Once again, I will allow Claude to explain the impact of a large language model not being programmed.

“# Training vs. Programming a Language Model

**Training** is like teaching a child through examples rather than explicit rules. The model learns patterns from vast amounts of text data, developing a statistical understanding of language and knowledge.

**Programming** is giving specific instructions that must be followed exactly, like writing code that tells a computer precisely what to do in each situation.

## Key Differences for Business Use

**Training creates flexible capabilities:**

- The model develops general language understanding and knowledge

- It can handle natural language variations and nuance

- It can generalize to new situations it wasn't explicitly trained on

**Programming creates rigid reliability:**

- Each function behaves exactly as specified

- Consistent, predictable outputs for the same inputs

- Clear boundaries of what the system can and cannot do

## Business Automation Implications

**Where LLMs excel:**

- Tasks requiring understanding context and nuance

- Adapting to variations in user requests

- Generating creative content

- Summarizing and extracting insights from unstructured data

**Where LLMs present challenges:**

- Tasks requiring 100% accuracy (financial calculations, legal compliance)

- Processes with zero tolerance for errors

- Applications requiring perfect reproducibility

Most effective business applications combine LLMs with traditional programming - using the LLM for understanding and generating language, while using programmed systems for precise calculations, database operations, and critical business logic. “

I believe that the best use for the LLM is to aide you in creating automations and business applications. In addition, I believe it can help with monitoring and analysis of the data. However, it needs to be separate from the software running the applications. Also, you will need to store your data and feed it into the LLM. There are also implementations in which you can have a local version of a LLM that is specifically trained for your application. However, the same limitations apply.

Conclusions

These are my current conclusions. I reserve the right to modify them as I learn more and new information is made available. I am more than happy thoughts, and if you have any corrections, please let me know.

The main conclusion at this point in time is that you need an external infrastructure to effectively use the large language model. In addition, I believe that you need someone on your staff that understands how all this fits together. They do not have to be an MIT professor of mathematics, but they will have to understand the basics of how these systems operate and communicate.

There are already service providers that are offering this type of infrastructure as a service. For example, Ahoi offers an integrated chat bot service. This type of service reduces time to market, personnel requirements, and capital cost. BTW, I have no relationship to this company other than their product looks interesting. Here is the link to their service.

https://jackbot.ai

The other option is to build it out yourself. This can give you more control and offers other benefits. However, it will require a fairly detailed level of expertise to implement the services into your operations. There are a lot of moving parts that need to be managed and maintained.

LLM’s can be great tools for making your business more efficient. However, you must understand its limitations and plan accordingly. This blog points out a few of the items that you need to consider when utilizing this service. Always remember, the AI hype is real, but it’s not that real.