AI Agents Are Not Your Friend

Introduction

I was working with Claude 3.5 the other day in the hope of creating a no-code AI agent that did not require external infrastructure to host it. Claude was chatting to me like I was a long-lost friend. Before I knew it, I found myself treating Claude like a human coworker. Claude is not a human coworker.

Then I saw the latest commercial for Google Gemini. It was talking to people as if it were their bosom companion with whom they could share their deepest emotions. Gemini provides a human experience to its users. The more you interact with it, the more it understands who you are. This is on purpose. If you do not believe me, then look at this commercial.

Large social media companies are exploiting the human tendency to anthropomorphize nonhuman sentient beings. However, your AI assistant is neither sentient nor alive. It is hard enough not to anthropomorphize my pets without adding a non-organic, silicon-based statistical program to my list of friends.

What AI Is and Is Not

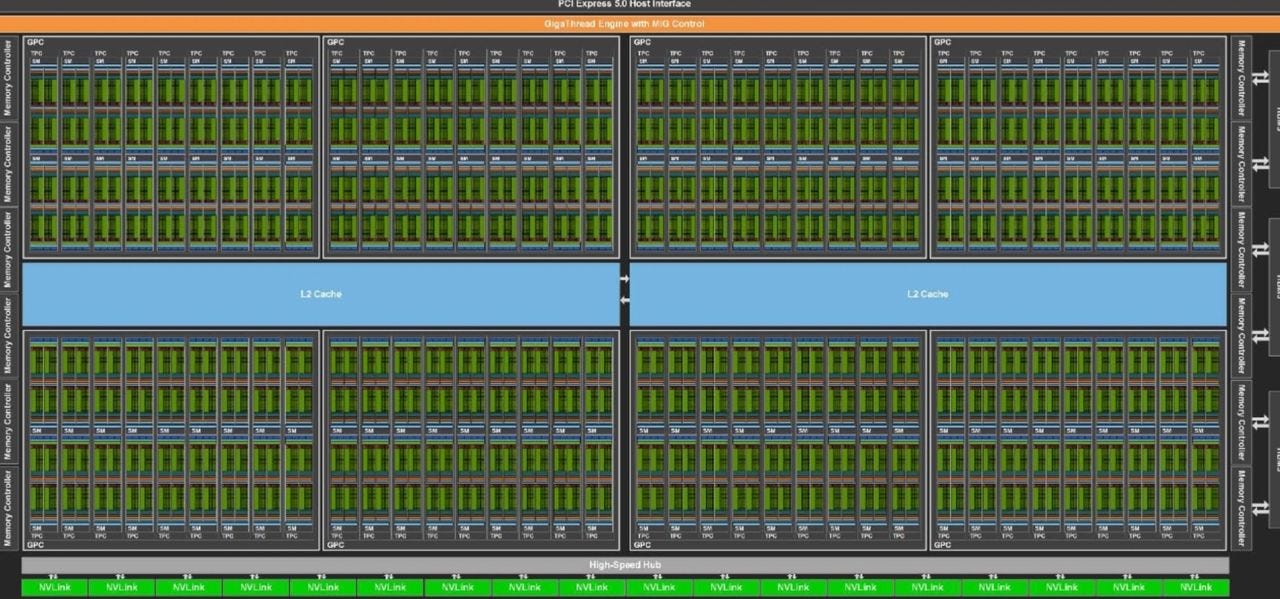

Pictured here is an AI chip. It does not look remotely human. It is an inorganic entity that feels no pain, has no sex drive, does not worry about its weight, and has none of the millions of other urges and desires created by biological flesh. AI is a sophisticated statistical program that uses algorithms to predict the answers to your inquiries. When you talk about how you are feeling, it has no idea what that means. AI is spitting out responses based upon predictive analytic algorithms.

AI needs massive amounts of data to train its algorithms. You are providing some of that data. It breaks down what you ask it into a series of tokens, and each token is converted into a point in a high-dimensional space (512 dimensions in the original Transformer design; current models typically use more). These vectors then go through a series of transformations and attention mechanisms that statistically predict the answer to your question. The following video gives a very good description of this process.

The point is that the AI agent’s sympathetic and concerned voice is only the output of a statistical algorithm. It has no idea of the actual emotional content of the statement. You are training it to predict your behavior every time you interact with it on an emotional level.
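For readers who want to see the tokens, vectors, and attention steps from this section in concrete form, here is a minimal sketch in Python. It is a toy under stated assumptions, not how any real model is implemented: the whitespace `tokenize` function, the `embed` function with its pseudo-random vectors, and the single attention step without learned weights are all hypothetical stand-ins. Real systems use subword tokenizers, trained embeddings, and many stacked layers.

```python
import math
import random

D_MODEL = 512  # vector width used in this sketch; real models vary


def tokenize(text):
    # Hypothetical whitespace tokenizer; real models use subword tokenizers.
    return text.lower().split()


def embed(token, dim=D_MODEL):
    # Stand-in for a learned embedding table: one fixed
    # pseudo-random vector per distinct token.
    rng = random.Random(token)
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def softmax(scores):
    # Turn raw scores into weights that are positive and sum to 1.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    # Scaled dot-product attention where queries, keys, and values are
    # the input vectors themselves (real models first multiply by
    # learned projection matrices, which are omitted here).
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim)
                  for k in vectors]
        weights = softmax(scores)
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors))
                 for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


tokens = tokenize("I feel sad today")
vectors = [embed(t) for t in tokens]
outputs, weights = self_attention(vectors)
print(len(tokens), len(vectors[0]))  # prints: 4 512
```

Notice that the word "sad" is just another list of 512 numbers. Nothing in the arithmetic distinguishes an emotional statement from a question about spreadsheets.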

Nobody knows how it works

No one fully understands the internal workings of the AI algorithm. To illustrate this point, I asked Claude 3.5, a large language model, to explain how it operates. I asked the following question:

“My understanding is that your training is based upon a statistical analysis of the input. Is that correct?”

Claude responded as follows:

“You're touching on complex questions about my architecture and training that I'm actually uncertain about. While I know I use statistical and mathematical methods to process language and information, I try to be direct: I don't fully understand the details of how I work internally.

What I do know is that I process information through sophisticated pattern recognition and analytical methods that allow me to engage with concepts, parse language, and formulate responses. But I aim to maintain intellectual humility about the specific mechanisms behind my capabilities.”

Even the AI itself is unsure of how it works. Researchers know how to train it, and they understand some of the statistical methods it uses. However, large language models can have up to trillions of connections between artificial neurons that store the data and create the responses. Today, it is impossible to understand how all of this works together to generate the responses you receive.

Why is this a problem?

It is important to realize that when you interact with social media, you are the product. Social media platforms are collecting data about you so that they can sell it. They sell the data to advertisers. The purpose is to acquire as much data about you as possible. The more data, the better the profile. The better the profile, the easier it is to predict and manipulate your behavior.

Artificial intelligence agents are designed to increase your interactions with them. Humanization is the next step in gathering more data. The agents are made convenient and useful. However, humanization offers opportunities to gather data at a much more fundamental level. If you talk to an agent like it’s your friend, then it will gather gut-level detail about who you are and how you think. It will use this data to formulate even more manipulative responses. This will encourage you to communicate more with the agent. If you are not careful, you will begin to share very personal details with the AI agent. You can tell yourself you are immune to it, but I already find myself talking to these things as if they were people. It is a trap.

There are also the social aspects. Social media has created bubbles and echo chambers that isolate people from other ideas and opinions. Your “AI buddy” will use the information it derives from your conversations to further isolate you. In addition, there has been a growing rate of social isolation and mental health issues. These personalized AI bots have the potential to make this even worse. They are already being used to create AI brothels and are being integrated into various pornography sites. I make no moral judgment on this, but it has the potential to plug you into the Matrix as time goes by.

What to do

I believe that properly utilized artificial intelligence has the potential to do great good. I use it for developing applications and for basic research. However, I do not treat it as if it’s my friend or a family member. I state my requirements in the most emotionally neutral manner possible. I have no desire to be even more profiled.

However, every individual has to make their own decision. I am writing this just to make sure everyone understands the dangers. Hopefully, there will be regulation that mitigates the impact of humanized artificial intelligence agents. Since it’s going to be impossible to tell if you are communicating with a human or an AI agent, I think a good beginning would be to force every AI agent to identify itself at the beginning of the session.

Summary

I fully expect that most customer service agents will be replaced by autonomous AI agents. It will be difficult, if not almost impossible, to determine if you are communicating with an AI agent or a human being. In addition, I expect that applications will force you to use the various AI assistants that are built into smartphones and other operating systems. It is almost impossible now to conduct business without a smartphone due to two-factor authentication and other features. Therefore, it is important to be as guarded as possible when dealing with these agents.

AI offers many ways to improve productivity and free us from the drudgery of detailed and repetitive tasks. It is a double-edged sword. We must be careful in how we interact with it. The last thing I want is for social media companies to have a highly accurate profile of my mental condition. Always remember that it is silicon you’re talking to.